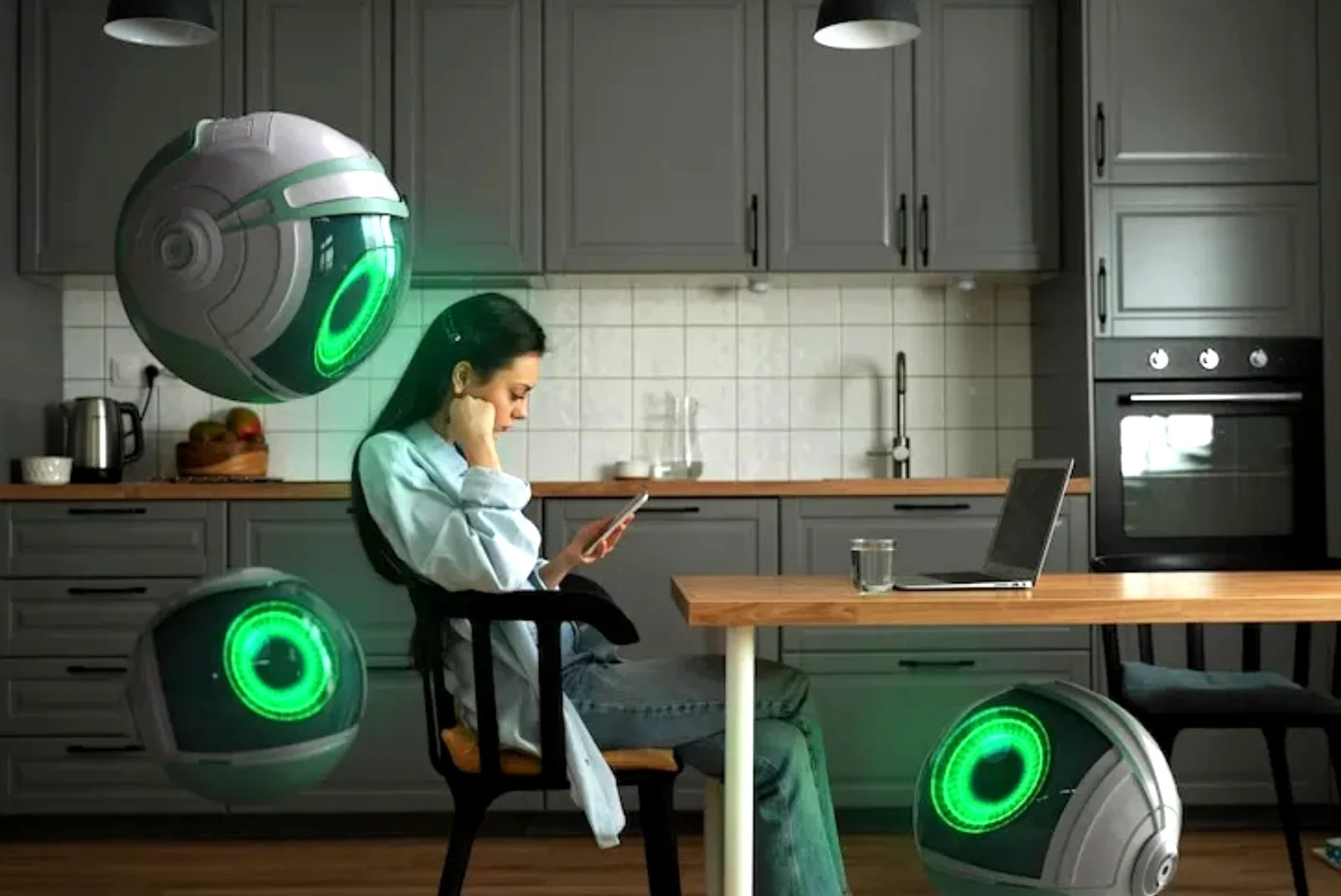

Autonomous Agents Are Rising: 4 Urgent Questions to Ask.

Examining the implications and challenges posed by the rapid spread of increasingly capable AI systems.

A recent analysis by Grand View Research projects that the global AI market will reach USD 1,811.8 billion by 2030, a compound annual growth rate (CAGR) of 37.3%. Autonomous agents are one of the developments expected to drive that growth. These are AI systems that perceive their environment, make decisions independently, and act toward specific objectives without continuous human oversight. Their spread forces a critical question: as autonomous agents rise, are we ready? The question is not merely academic. It calls for a hard look at our technological, ethical, and societal frameworks. It also calls for engaging with the coming changes early enough to ensure these powerful tools serve humanity well. The sections below unpack four questions that demand immediate and thorough deliberation from policymakers, technologists, and the broader public.
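A compound annual growth rate multiplies the market by (1 + CAGR) every year, so the quoted figures imply steep cumulative growth. The Python sketch below works through that arithmetic. It assumes a seven-year window ending in 2030. That start year is an assumption made here, not something stated above, so treat the implied starting value as illustrative rather than a figure from the report.

```python
def growth_multiple(cagr: float, years: int) -> float:
    """Factor by which a quantity grows after `years` of compounding at rate `cagr`."""
    return (1.0 + cagr) ** years


def implied_start_value(end_value: float, cagr: float, years: int) -> float:
    """Back out the starting value that compounds to `end_value` over `years`."""
    return end_value / growth_multiple(cagr, years)


END_VALUE_BILLION_USD = 1811.8  # projected 2030 market size quoted above
CAGR = 0.373                    # 37.3% compound annual growth rate quoted above
YEARS = 7                       # ASSUMPTION: a 2023-to-2030 window, not stated in the text

multiple = growth_multiple(CAGR, YEARS)
start = implied_start_value(END_VALUE_BILLION_USD, CAGR, YEARS)
print(f"{multiple:.1f}x growth, implied start ~ USD {start:.0f} billion")
```

Under that assumption the market would grow roughly 9.2-fold, which implies a starting size of about USD 197 billion. A different start year changes both numbers.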

1. Defining Autonomy and Control: Where Do We Draw the Line for Autonomous Agents?

The concept of autonomy in AI is not monolithic. It exists on a spectrum that runs from limited assistive capabilities to full operational independence. A foundational challenge is to delineate precise thresholds between levels of autonomy for future deployments of autonomous agents. The considerations extend beyond technical feasibility to risk assessment and the allocation of responsibility. A robust taxonomy of autonomy could provide a framework for regulatory and developmental pathways and help ensure that deployments align with public safety and ethical imperatives. Such a taxonomy might echo SAE International's J3016 standard, which defines six levels of driving automation from Level 0 (no automation) to Level 5 (full automation). Without clear demarcation, the risk of mission creep or unintended operational divergence rises considerably.
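Here is a minimal Python sketch of how a graded taxonomy might be used. In it, each deployment risk tier caps the autonomy level an agent may be granted. The six levels loosely echo the structure of SAE J3016. Their names and meanings for general AI agents are hypothetical, and so are the risk tiers and the `MAX_LEVEL_BY_RISK` policy.

```python
from enum import IntEnum


class AutonomyLevel(IntEnum):
    """Hypothetical agent autonomy scale, loosely modeled on SAE J3016's six levels."""
    NO_AUTOMATION = 0  # human performs every action
    ASSISTIVE = 1      # agent suggests, human decides and acts
    PARTIAL = 2        # agent acts on narrow tasks, human approves each action
    CONDITIONAL = 3    # agent acts alone within a defined domain, human is the fallback
    HIGH = 4           # agent acts alone and handles its own fallback within its domain
    FULL = 5           # agent acts alone in any domain


# Hypothetical policy: the highest autonomy level permitted for each risk tier.
MAX_LEVEL_BY_RISK = {
    "low": AutonomyLevel.HIGH,
    "medium": AutonomyLevel.CONDITIONAL,
    "high": AutonomyLevel.PARTIAL,
}


def deployment_allowed(level: AutonomyLevel, risk_tier: str) -> bool:
    """Return True if an agent at `level` may be deployed for a task in `risk_tier`."""
    return level <= MAX_LEVEL_BY_RISK[risk_tier]


print(deployment_allowed(AutonomyLevel.CONDITIONAL, "medium"))  # True
print(deployment_allowed(AutonomyLevel.HIGH, "high"))           # False
```

Encoding the levels as an ordered `IntEnum` lets a policy compare them directly. This particular policy never permits Level 5 for any tier, which is one way to express a hard ceiling on autonomy.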

Furthermore, human-in-the-loop (HITL) and human-on-the-loop (HOTL) architectures represent distinct control paradigms. HITL models emphasize collaborative intelligence. Human operators retain direct oversight and can intervene before decisions take effect, particularly in high-stakes scenarios or in novel, ambiguous situations. HOTL systems grant the agent greater operational latitude. Human supervision is relegated to monitoring and strategic intervention, often after a decision has been made. The choice between these paradigms is not arbitrary. It must be informed by rigorous analysis of the system's operational domain, the severity of potential failures, and the latency of human cognitive processing, since a human cannot approve a decision that must be made faster than they can react. As AI agents grow more sophisticated, it becomes harder to design interfaces that support effective human oversight without sacrificing the benefits of autonomous operation.
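The difference between the two paradigms comes down to where the human check sits relative to the action. The Python sketch below models that difference under stated assumptions. `Supervisor.approve` stands in for a human's pre-decision judgment, and the 0-to-10 severity scale is hypothetical. `choose_mode` is an illustrative rule for picking a paradigm from an action's severity and deadline, and it assumes a human response time of 1500 ms.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class Action:
    name: str
    severity: int  # hypothetical 0-10 scale of potential harm


@dataclass
class Supervisor:
    """Stand-in for a human operator."""
    approve: Callable[[Action], bool]  # pre-decision check (HITL)
    review_queue: List[Action] = field(default_factory=list)  # post-decision review (HOTL)


def choose_mode(severity: int, deadline_ms: float, human_response_ms: float = 1500.0) -> str:
    """Hypothetical rule: require HITL for severe actions when a human can respond in time."""
    if severity >= 5 and deadline_ms >= human_response_ms:
        return "hitl"
    return "hotl"


def execute(action: Action, mode: str, supervisor: Supervisor, log: List[str]) -> bool:
    """Run `action` under human-in-the-loop ("hitl") or human-on-the-loop ("hotl") control."""
    if mode == "hitl":
        # The agent waits: nothing happens until the human approves.
        if not supervisor.approve(action):
            log.append(f"blocked {action.name}")
            return False
        log.append(f"executed {action.name} after approval")
        return True
    if mode == "hotl":
        # The agent acts at once; the human reviews afterwards and may intervene.
        log.append(f"executed {action.name}")
        supervisor.review_queue.append(action)
        return True
    raise ValueError(f"unknown mode: {mode}")


# Hypothetical operator judgment: approve anything below severity 8.
sup = Supervisor(approve=lambda a: a.severity < 8)
log: List[str] = []
execute(Action("reroute_delivery", 2), choose_mode(2, 5000), sup, log)  # low severity: HOTL
execute(Action("open_valve", 6), choose_mode(6, 5000), sup, log)        # HITL, approved
execute(Action("shut_down_pump", 8), choose_mode(8, 5000), sup, log)    # HITL, blocked
execute(Action("emergency_brake", 9), choose_mode(9, 100), sup, log)    # no time for a human: HOTL
print(log)
print([a.name for a in sup.review_queue])
```

The last action shows the latency trade-off described above. Its 100 ms deadline is shorter than the assumed human response time, so the action cannot wait for approval and the rule falls back to HOTL. A real system might instead restrict such actions to pre-authorized policies.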

- Degrees of Autonomy: Understanding the functional continuum from narrow AI automation to generalized autonomous decision-making.

- Situational Awareness: Ensuring autonomous agents possess sufficient contextual understanding to operate safely and effectively in complex, dynamic environments.

- Algorithmic Transparency: Developing methods to interpret and audit the decision-making processes of autonomous systems to foster trust and accountability.
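One building block for auditing an agent's decisions, as opposed to interpreting them, is a tamper-evident record of what it saw, what it decided, and why. The Python sketch below chains each record to the previous one with a SHA-256 hash, so editing or reordering any past entry is detectable. The record fields and the `DecisionLog` class are hypothetical illustrations, not an established standard.

```python
import hashlib
import json
from typing import Any, Dict, List

GENESIS = "genesis"  # placeholder "previous hash" for the first record


def _digest(entry: Dict[str, Any], prev_hash: str) -> str:
    """SHA-256 over the record's canonical JSON plus the previous record's hash."""
    payload = json.dumps(entry, sort_keys=True) + prev_hash
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


class DecisionLog:
    """Hypothetical append-only decision log; each record's hash covers its predecessor."""

    def __init__(self) -> None:
        self.records: List[Dict[str, Any]] = []

    def record(self, inputs: Dict[str, Any], decision: str, rationale: str) -> None:
        prev = self.records[-1]["hash"] if self.records else GENESIS
        entry = {"inputs": inputs, "decision": decision, "rationale": rationale}
        self.records.append({**entry, "prev": prev, "hash": _digest(entry, prev)})

    def verify(self) -> bool:
        """Recompute the chain; editing or reordering any record breaks it."""
        prev = GENESIS
        for rec in self.records:
            entry = {k: rec[k] for k in ("inputs", "decision", "rationale")}
            if rec["prev"] != prev or rec["hash"] != _digest(entry, prev):
                return False
            prev = rec["hash"]
        return True


audit = DecisionLog()
audit.record({"speed_kmh": 42, "obstacle": True}, "brake", "obstacle detected within 10 m")
audit.record({"speed_kmh": 0, "obstacle": False}, "resume", "path clear")
print(audit.verify())                        # True
audit.records[0]["decision"] = "accelerate"  # simulate after-the-fact tampering
print(audit.verify())                        # False
```

A hash chain only detects tampering if the latest hash is also stored somewhere the tamperer cannot rewrite, such as a separate system. Otherwise the whole chain could simply be recomputed, and truncating the most recent records would go unnoticed.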

2. Navigating the Ethical Labyrinth of Autonomous AI Agents.

Ethical Frameworks and Accountability in Autonomous Systems

The deployment of autonomous AI agents inevitably raises profound ethical questions about accountability, fairness, and potential harm. When an autonomous system makes a decision that causes unintended consequences, who bears the moral and legal responsibility: the developer, the deployer, the user, or the agent itself? This quandary highlights the inadequacy of existing legal and ethical frameworks, which were designed predominantly for human actors. Crafting new paradigms requires an interdisciplinary approach that integrates insights from philosophy, jurisprudence, and computer science. The development of ethical AI agents must go beyond mere compliance. It must embed proactive ethical reasoning in their operational algorithms, so that adverse outcomes are anticipated and mitigated by design. The U.S. National Institute of Standards and Technology (NIST), among other bodies, is developing guidance on these issues to steer responsible AI innovation. One example is its AI Risk Management Framework (AI RMF 1.0, released in January 2023).

The Challenge of Moral Dilemmas

Autonomous agents operating in complex environments may encounter situations requiring difficult trade-offs, akin to classical moral dilemmas. Programming an agent to prioritize certain values over others (e.g., passenger safety versus pedestrian safety) necessitates explicit ethical guidelines that are challenging to define universally and embed computationally.

Bias and Discrimination: A Persistent Concern for AI Agents

A pervasive ethical concern with AI agents is that they may perpetuate or amplify societal biases embedded in their training data. If autonomous systems learn from historical data that reflects human prejudices, they risk making discriminatory decisions, particularly in sensitive domains such as hiring, lending, or criminal justice. Addressing this requires rigorous data curation and auditing. It also requires fairness-aware algorithms designed to detect and mitigate bias. Furthermore, many advanced AI models are opaque, often called “black boxes,” and this opacity makes biased outcomes harder to identify and remediate. Efforts to enhance algorithmic transparency and explainability (XAI) are crucial for building public trust and ensuring the equitable application of autonomous technologies.
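Detecting bias usually starts with measuring outcomes per group. The Python sketch below computes one common metric: the ratio of each group's selection rate to the highest group's rate. It flags any group below 0.8, a threshold that mirrors the "four-fifths rule" from US employment-selection guidelines. The decisions are synthetic. This is only one of several fairness metrics, and those metrics can conflict with one another. It detects disparities but does not mitigate them.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def selection_rates(outcomes: Iterable[Tuple[str, bool]]) -> Dict[str, float]:
    """Fraction of positive decisions (e.g. 'hire', 'approve') in each group."""
    totals: Dict[str, int] = defaultdict(int)
    positives: Dict[str, int] = defaultdict(int)
    for group, selected in outcomes:
        totals[group] += 1
        positives[group] += int(selected)
    return {g: positives[g] / totals[g] for g in totals}


def impact_ratios(rates: Dict[str, float]) -> Dict[str, float]:
    """Each group's selection rate divided by the highest group's rate."""
    best = max(rates.values())
    return {g: (r / best if best > 0 else 1.0) for g, r in rates.items()}


def flag_adverse_impact(
    outcomes: Iterable[Tuple[str, bool]], threshold: float = 0.8
) -> Dict[str, bool]:
    """Flag groups whose impact ratio falls below `threshold` (0.8 = four-fifths rule)."""
    ratios = impact_ratios(selection_rates(outcomes))
    return {g: ratio < threshold for g, ratio in ratios.items()}


# Synthetic decisions: group A is selected 6 times out of 10, group B 3 times out of 10.
decisions = [("A", i < 6) for i in range(10)] + [("B", i < 3) for i in range(10)]
print(selection_rates(decisions))      # {'A': 0.6, 'B': 0.3}
print(flag_adverse_impact(decisions))  # {'A': False, 'B': True}
```

Group B's ratio is 0.5, well under the 0.8 threshold. A flag like this is a prompt for investigation, not a legal or causal determination.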

“The greatest challenge facing the ethical development of autonomous systems is not merely preventing harm, but ensuring their design actively promotes human flourishing and societal good.”

3. Societal and Economic Repercussions: Are We Preparing for Autonomous AI?

The Transformation of Labor Markets and Socio-Economic Structures

The impact of autonomous agents on global labor markets is a central concern. It prompts discussions that range from widespread job displacement to the creation of new, unforeseen roles. Automation has historically driven economic progress, but autonomous agents are expected to permeate industries at an unusual scale and speed, from logistics and manufacturing to healthcare and professional services. That suggests a potentially disruptive period. Proactive strategies for preparing for autonomous AI must include substantial investment in education, re-skilling, and up-skilling initiatives that equip the workforce with the competencies an AI-augmented economy requires. This calls for a fundamental rethinking of vocational training and continuous learning, and potentially for new social safety nets to cushion the transition for affected populations. Ignoring these labor-market shifts risks exacerbating economic inequality and fostering social unrest.

Moreover, the integration of autonomous agents will fundamentally alter the structure of industries and the nature of work itself. We are moving beyond simple task automation toward the automation of complex processes and even entire managerial functions. This structural transformation demands robust policy responses. These include discussions of universal basic income, revised taxation of automated labor, and regulatory sandboxes for innovative economic models. Our readiness for AI as a society hinges not only on technological adoption but on our collective capacity to adapt social and economic institutions to these epochal changes. Reports from leading consultancies such as McKinsey highlight the vast economic potential, but also the need for strategic planning.

Policy and Regulatory Gaps for the Future of AI Agents

AI agents are evolving far faster than comprehensive regulatory frameworks. Current statutes are often inadequate to address the complexities introduced by autonomous decision-making, data privacy, and the cross-jurisdictional operation of AI systems. There is an urgent need for agile, adaptable regulatory bodies that understand the technical nuances of AI, anticipate future developments, and help craft rules that balance innovation with public protection. This includes clear guidelines for data governance and for intellectual property rights in AI-generated works. It also includes international cooperation to prevent a fragmented regulatory landscape, which could hinder global progress or create regulatory havens for risky deployments. Governments, industry, academia, and civil society must work together to co-create policies that are both effective and equitable in governing this nascent domain.

| Area of Impact | Potential Disruption | Mitigation Strategies |
|---|---|---|
| Employment | Routine job displacement | Re-skilling programs, UBI discussions |
| Privacy | Increased data collection and analysis | Robust data governance, consent frameworks |
| Security | New attack vectors, system vulnerabilities | Advanced cybersecurity, robust AI design |

4. Securing the Future of Autonomous Agents: Mitigating Risks and Ensuring Resilience.

Cybersecurity and Robustness Challenges

As autonomous agents become integral to critical infrastructure, defense systems, and daily life, their security becomes paramount. The future of autonomous agents is tied to how robust and resilient these systems are against malicious attacks, accidental failures, and unforeseen vulnerabilities. Autonomous systems present larger attack surfaces than traditional software because they rely on sensors, use complex decision-making algorithms, and are often distributed. Adversarial attacks are a particularly insidious threat. In these attacks, subtle perturbations to input data trick an AI into misclassifying objects or making incorrect decisions. Two measures are not merely best practices but necessities: pursuing provable security guarantees where feasible, and incorporating robust design principles such as redundancy, fail-safe mechanisms, and continuous monitoring. The consequences of a compromised autonomous agent in a critical domain could range from severe economic disruption to catastrophic loss of life. Agencies such as the U.S. Cybersecurity and Infrastructure Security Agency (CISA) are already highlighting the growing threat landscape associated with advanced technologies.
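The Fast Gradient Sign Method (FGSM) makes the point about subtle perturbations concrete. The Python sketch below applies it to a toy linear classifier in place of a neural network, so the gradient is available in closed form. The model, its weights, and the feature count are all illustrative assumptions.

```python
from typing import List


def score(w: List[float], b: float, x: List[float]) -> float:
    """Linear classifier: score > 0 means class 1 (say, 'obstacle ahead')."""
    return sum(wi * xi for wi, xi in zip(w, x)) + b


def sign(v: float) -> int:
    return (v > 0) - (v < 0)


def fgsm_linear(w: List[float], x: List[float], epsilon: float) -> List[float]:
    """FGSM against a linear model, pushing a class-1 input toward class 0.

    For a linear model the gradient of the score with respect to x is just w,
    so moving every feature by -epsilon * sign(w_i) lowers the score as much
    as possible while changing no single feature by more than epsilon.
    """
    return [xi - epsilon * sign(wi) for xi, wi in zip(x, w)]


# Hypothetical model with 1,000 input features (think pixels scaled to [0, 1]).
N = 1000
w = [0.01] * N
b = -4.8
x = [0.5] * N
x_adv = fgsm_linear(w, x, epsilon=0.03)

print(score(w, b, x) > 0)      # True: clean input is classified as class 1
print(score(w, b, x_adv) > 0)  # False: a 0.03 nudge per feature flips the label
```

The clean input clears the decision boundary by a margin of about 0.2, and each feature moves by only 0.03. Because 1,000 small changes all push in the same direction, the score still falls by about 0.3. This accumulation across many input dimensions is one intuition for why high-dimensional perception models are vulnerable.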

System Interoperability and Ecosystemic Resilience

The increasing interconnectedness of autonomous agents within complex ecosystems introduces further layers of security and reliability challenges. An autonomous vehicle, for instance, relies on a vast network of sensors, communication protocols, cloud services, and external data feeds. A vulnerability in one component or a disruption in its external dependencies could cascade through the entire system, leading to widespread failures. Ensuring system interoperability and the development of standardized, secure communication protocols are vital for creating a resilient autonomous ecosystem. This includes not only technical standards but also collaborative frameworks for threat intelligence sharing, coordinated incident response, and continuous vulnerability assessment across diverse stakeholders. The concept of ecosystemic resilience extends beyond individual agent robustness to encompass the ability of the entire network of autonomous and human systems to withstand and recover from disturbances.
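A cascade of this kind can be traced by treating the system as a dependency graph. Starting from a failed component, one walks the graph to find everything that relies on it. The Python sketch below does this with a breadth-first search. The vehicle's component names and dependency map are hypothetical.

```python
from collections import deque
from typing import Dict, List, Set

# Hypothetical dependency map: component -> components it depends on.
DEPENDS_ON: Dict[str, List[str]] = {
    "path_planner": ["perception", "hd_map"],
    "perception": ["lidar", "camera"],
    "hd_map": ["cloud_map_service"],
    "fleet_dispatch": ["cloud_map_service", "v2x_link"],
    "lidar": [],
    "camera": [],
    "cloud_map_service": [],
    "v2x_link": [],
}


def impacted_by(failed: str, depends_on: Dict[str, List[str]]) -> Set[str]:
    """Return every component that transitively depends on `failed` (breadth-first)."""
    # Invert the graph: dependency -> components that rely on it.
    dependents: Dict[str, List[str]] = {node: [] for node in depends_on}
    for node, deps in depends_on.items():
        for dep in deps:
            dependents.setdefault(dep, []).append(node)

    impacted: Set[str] = set()
    queue = deque([failed])
    while queue:
        current = queue.popleft()
        for parent in dependents.get(current, []):
            if parent not in impacted:
                impacted.add(parent)
                queue.append(parent)
    return impacted


print(sorted(impacted_by("cloud_map_service", DEPENDS_ON)))
# ['fleet_dispatch', 'hd_map', 'path_planner']
print(sorted(impacted_by("camera", DEPENDS_ON)))
# ['path_planner', 'perception']
```

In this sketch a single cloud-service outage reaches the path planner through the map. It also reaches a separate fleet-dispatch function. This kind of cross-component exposure is what coordinated vulnerability assessment aims to surface.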

Moreover, the adoption of approaches such as swarm intelligence and multi-agent systems makes collective security a priority. A coordinated attack on a swarm of autonomous drones, for example, could have devastating military or infrastructural implications. The interplay between individual agent security and the integrity of the collective system requires novel approaches to distributed security architecture and real-time threat detection. These demands are fundamentally reshaping our understanding of cyber defense in an age of pervasive autonomy.
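One established defense for collective systems is robust aggregation. The reports of many agents are fused with statistics that a minority of compromised members cannot drag arbitrarily far. The Python sketch below compares a plain mean with the median and a trimmed mean. The wind-speed scenario, the readings, and the number of compromised drones are invented for illustration.

```python
import statistics
from typing import List


def consensus_mean(reports: List[float]) -> float:
    """Naive fusion: one extreme report can drag the result arbitrarily far."""
    return statistics.fmean(reports)


def consensus_median(reports: List[float]) -> float:
    """Robust fusion: stays within the honest range while fewer than half the reports are bad."""
    return statistics.median(reports)


def trimmed_mean(reports: List[float], f: int) -> float:
    """Drop the f lowest and f highest reports, then average; tolerates up to f bad reports."""
    if len(reports) <= 2 * f:
        raise ValueError("need more than 2 * f reports")
    kept = sorted(reports)[f:len(reports) - f]
    return statistics.fmean(kept)


# Seven drones estimate wind speed in m/s; two compromised drones report 95.0.
honest = [12.1, 11.8, 12.4, 12.0, 11.9]
reports = honest + [95.0, 95.0]

print(round(consensus_mean(reports), 1))     # 35.7
print(consensus_median(reports))             # 12.1
print(round(trimmed_mean(reports, f=2), 2))  # 12.17
```

Two false reports pull the mean to about 35.7 m/s, while the median and trimmed mean stay near the honest readings. The trade-off is an assumption about how many reporters are compromised. The median assumes fewer than half, and the trimmed mean assumes at most `f`.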

Who Should Consider This?

The profound implications of autonomous agents necessitate thoughtful consideration across a broad spectrum of stakeholders. This is not a challenge confined to a single industry or discipline but a societal imperative demanding collective engagement and foresight.

- Policymakers and Regulators: For developing comprehensive, adaptive legal and ethical frameworks that govern the design, deployment, and operation of autonomous systems, ensuring public safety and societal benefit.

- Technologists and Researchers: For advancing the science of AI in a responsible manner, focusing on explainability, robustness, security, and ethical alignment in the development of future AI agents.

- Business Leaders and Industry Innovators: For strategically integrating autonomous technologies into operations, understanding economic implications, investing in workforce adaptation, and adhering to ethical deployment guidelines.

- Educators and Workforce Development Professionals: For designing curricula and training programs that prepare individuals for a changing job market, fostering skills essential for collaborating with and managing autonomous AI.

- The General Public: For understanding the capabilities and limitations of autonomous agents, engaging in informed public discourse, and holding developers and regulators accountable for responsible AI development.

Conclusion

The rise of autonomous agents marks a pivotal moment in human history, promising unprecedented advancements across virtually every sector. However, this transformative potential is intrinsically linked to our collective willingness to address the profound questions they pose regarding control, ethics, societal impact, and security. Ignoring these urgent inquiries would be to abdicate our responsibility in shaping a future that is not merely technologically advanced but also just, equitable, and secure. The journey toward a future enriched by autonomous intelligence requires a concerted, multidisciplinary effort, a grand collaborative endeavor between innovators, ethicists, policymakers, and citizens. Our societal readiness for AI will not be determined by the speed of technological progress alone, but by the wisdom with which we navigate its complexities.

It is imperative that we move beyond passive observation to active participation. Engage in discussions, support ethical AI research, and advocate for policies that prioritize human well-being alongside technological innovation. Only through proactive and thoughtful engagement can we truly be ready for the autonomous future that is rapidly unfolding. The time to ask and answer these urgent questions is now.